
Deepfakes are a rapidly growing concern in the digital world, as they have the potential to cause significant harm to individuals and society as a whole. As artificial intelligence and machine learning technologies become more and more complex, creating realistic and convincing deepfakes is becoming easier day by day. Deepfakes are manipulated videos or images that can be used for a variety of purposes, including spreading misinformation and identity theft. In fact, they have the ability to spread propaganda and misinformation that could undermine people’s trust in institutions, incite chaos and confusion, and upend the very fabric of our society. In this article, we’ll explore the dangers of deepfakes and provide practical tips on how to detect deepfake videos.

What Is a Deepfake?

We can define deepfake as a type of synthetic media that has been manipulated or generated using artificial intelligence (AI) algorithms. In other words, deepfakes use machine learning to create or alter audio, video, or images to depict events that did not occur or to portray individuals saying or doing things that they did not actually do.


If you have seen videos of Mark Zuckerberg boasting about his total control of billions of people’s stolen data, Barack Obama using offensive words to describe Donald Trump, or Kit Harington delivering a heartfelt apology for the disappointing finale of Game of Thrones, then you already have an idea of what deepfakes are. Deepfakes are the modern-day equivalent of photoshopping. Like computer viruses, these manipulated videos, audio clips, and images can harm both individuals and organizations. Unlike computer viruses, however, almost anyone can produce a good deepfake, and identifying a well-made one can be exceptionally challenging.

Did you know that neural networks can create realistic faces of people who have never existed? On websites such as This Person Does Not Exist or Generated.Photos, you can browse a vast array of AI-generated faces. None of the people you see there are real. You can find more of this AI wizardry on the This X Does Not Exist website.

Experts have identified the three most dangerous types, or methods, of deepfakes. The first is the deepfake voice, or deepfake audio: an AI-generated voice that imitates the voice of a real person. Deepfake audio is currently the most lucrative technique scammers use, typically for voice phishing. For example, in 2019, criminals used voice-cloning technology to defraud the CEO of a British energy company of $243,000.

The second method is deepfake video, which can be used to manipulate public opinion and brainwash people by presenting false information convincingly. The well-known videos featuring Barack Obama and President Trump are examples. Deepfake video can also be used to create fake footage of ordinary people, particularly revenge pornography.

The third method is deepfake text. AI can produce text that convincingly mimics the writing of real people, including genuine-looking social media posts. Fake accounts built on such text may look authentic and even attract followers over time, and a group of them can easily be used to slander a company or a product. The main way to spot such AI-generated text is to look for patterns in the language, such as word choice and sentence structure, and to detect inconsistencies.

The Dangers of Deepfake Technology

In today’s world of fake news and disinformation, people use deepfake technology to spread misinformation, build false narratives, craft a particular public image, censor or mock people, and create pornographic content.

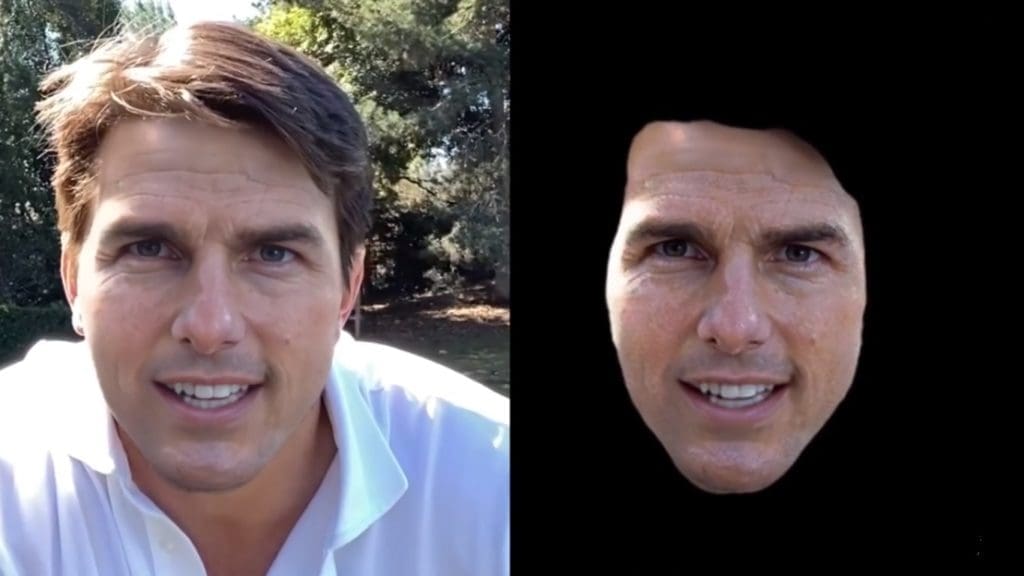

Deepfakes often target celebrities. Their fame and the abundance of footage of them make them perfect for memes, and AI technology can easily create convincing deepfakes of them. A few years ago, TikTok was flooded with videos featuring actor Tom Cruise engaging in activities that were unusual for him, such as fooling around in an expensive men’s fashion store, performing a coin trick, and playfully growling while singing a brief version of “Crash Into Me” by the Dave Matthews Band. Another example is a video of Yong Mei, a famous Chinese celebrity, that went viral a few years ago. In this video, she was inserted into a 1983 Hong Kong TV series. The video got 240 million views before Chinese authorities eventually removed it. The creator, a fan of Yong Mei, apologized publicly on Weibo and said he had made the video to raise awareness about deepfake technology.

In politics, deepfakes can be used to create fake videos or images of political figures saying or doing things that they never said or did, in order to spread misinformation or damage someone’s reputation. In 2019, a video of Nancy Pelosi was edited to make it seem that she was slurring her speech and looked drunk. It was shared globally, yet Facebook did not remove it even after it was proven to be fake. A video of Donald Trump telling a story about a reindeer and Barack Obama’s public service announcement are other examples of this type of fake video.

Did you know that 96% of deepfakes are pornographic? The vast majority (99%) of the faces used in these manipulated videos belong to real women, including non-celebrities. Deepfakes allow a person to effortlessly superimpose the face of an ex-girlfriend onto the body of a porn actor and circulate the result within their social circle. The victim may then suffer the consequences of being falsely associated with a taboo act that never happened.

Deepfakes are also increasingly being used in financial scams. Scammers typically create deepfakes of important people such as CEOs or politicians and then use them to trick victims into transferring money or handing over sensitive information. For example, a deepfake video of a CEO might be used to persuade an employee to transfer money to a fraudulent account. In 2019, a group of scammers imitated the voice of the CEO of a UK-based energy company and tricked an employee into transferring $243,000 to a fraudulent account.

Another dangerous aspect of deepfakes is that many easy-to-use tools for creating them are available online. According to Ben Coleman of Reality Defender, any middle school student can create a deepfake using a five-year-old iPhone.

Some Ethical Applications of Deepfakes

It is essential to remember that deepfake technology also has ethical applications. One example is enhancing the image quality of old or low-resolution videos. Instead of relying on traditional upscaling, this technology redraws the image, which allows the picture quality itself to be improved. Deepfake technology can also synchronize lip movements with speech, known as lip syncing. Perfecting lip movements to match speech in any language would be a significant advance for film and TV dubbing. A practical example is the PSA featuring David Beckham aimed at combating malaria. In the video, Beckham appears to speak nine languages, and deepfake technology was used to adjust his lip movements to each one.

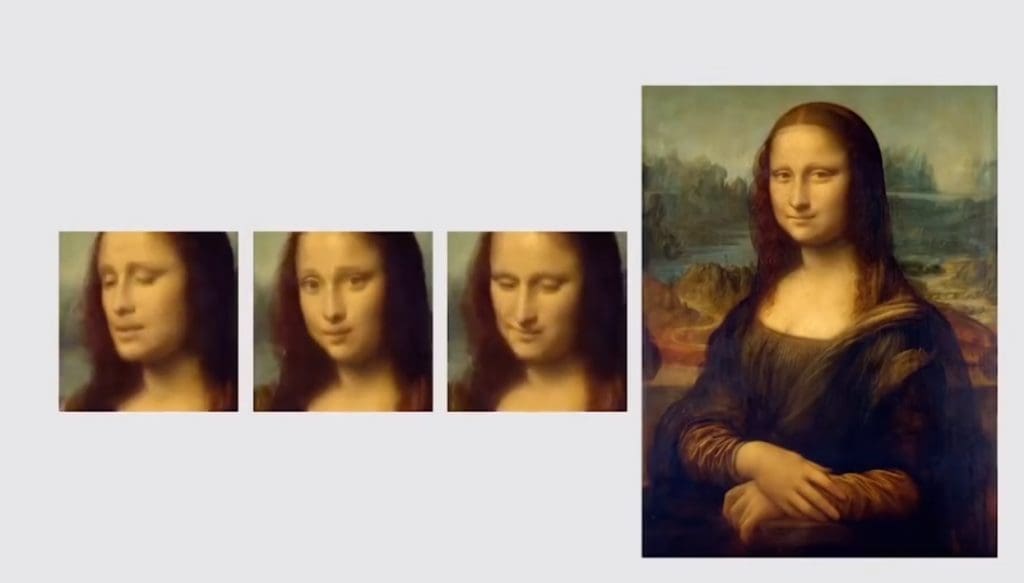

The potential of deepfakes also extends to bringing deceased artists back to life, such as Salvador Dalí at the Salvador Dalí Museum in Florida. Another unique application of this technology is bringing art to life. For example, Samsung’s AI research lab made the Mona Lisa move her head, eyes, and mouth. In the future, this technology could save the film industry time and money.

How to Identify Deepfakes

Look for Inconsistencies in the Video

Deepfakes often contain subtle inconsistencies that give them away. For example, the lighting, shadows, and reflections may not match the surroundings, or the person’s movements may look slightly unnatural.

A common warning sign of a deepfake video or image is unnatural eyes, especially if a person’s eyes look strange or never blink. Blinking and natural eye movement are hard to fake, so if someone appears to stare without blinking, or their eye movements look odd, the video may have been manipulated. In addition, when people talk to each other, their eyes usually move naturally, and this is difficult to replicate accurately in a fake video.
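
To make the blinking check concrete, here is a minimal Python sketch based on the eye aspect ratio (EAR), a common measure of how open an eye is. The article does not name a specific method, so this is an illustrative choice rather than how any particular detector works. It assumes you already have six (x, y) eye landmarks per frame from some face-landmark detector; the 0.2 threshold and the two-frame minimum are assumed starting values that would need tuning.

```python
import math


def eye_aspect_ratio(eye):
    """Eye aspect ratio for six (x, y) landmarks ordered p1..p6.

    p1 and p4 are the horizontal eye corners; p2 and p3 lie on the upper
    lid, with p6 and p5 on the lower lid directly below them.
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


def count_blinks(ear_per_frame, threshold=0.2, min_frames=2):
    """Count runs of at least `min_frames` consecutive frames with EAR below `threshold`.

    Both default values are assumptions, not values taken from the article.
    """
    blinks = 0
    run = 0
    for ear in ear_per_frame:
        if ear < threshold:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # the clip ended while the eye was still closed
        blinks += 1
    return blinks


def blinks_per_minute(ear_per_frame, fps, **kwargs):
    """Blink rate for a clip, given one EAR value per frame and the frame rate."""
    minutes = len(ear_per_frame) / fps / 60.0
    return count_blinks(ear_per_frame, **kwargs) / minutes
```

A clip in which the blink rate is zero or implausibly low over a long stretch would be worth a closer look, although a low rate on its own does not prove manipulation.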

A disproportionate face and body is another sign of a deepfake. The AI algorithms that create deepfakes can struggle to replicate the proportions of a person’s face and body accurately, which results in a distorted or exaggerated appearance. If a person looks strange when they turn their head to the side, or if their movements seem disconnected or unnatural from one moment to the next, the video may have been altered. Mismatched skin tones and oddly placed shadows are two other important clues.

Check the Audio Quality

A deepfake may have audio that does not match the person’s lip movements or tone of voice. Listen carefully to check whether the audio sounds natural and fits the context of the video. When making deepfakes, creators often pay more attention to the visuals than to the audio. Signs of fake audio can include lip movements that do not match the spoken words, robotic-sounding voices, odd pronunciation, digital background noise, or even a complete absence of audio.

Use Technology to Identify Deepfakes

Advances in deepfake technology have also led to the development of protection tools. The University at Buffalo has created a tool that automatically identifies deepfake photos by examining the light reflections in the eyes. When we look at something, the scene in front of us is reflected in both eyes, so in genuine photos the two eyes show reflections of the same shape and color. Deepfake generators, however, typically fail to produce matching reflections, since they combine many photos to generate one.
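
The idea of comparing the reflections in the two eyes can be sketched in a few lines of Python. This is an illustration of the principle only, not the University at Buffalo tool’s actual implementation. It assumes the two eye regions have already been cropped, aligned, and converted to same-sized grayscale grids with values from 0 to 255; the brightness threshold of 200 is likewise an assumption.

```python
def highlight_mask(gray_eye, threshold=200):
    """Return the set of (row, col) pixels brighter than `threshold` in an eye crop."""
    return {
        (r, c)
        for r, row in enumerate(gray_eye)
        for c, value in enumerate(row)
        if value > threshold
    }


def reflection_iou(left_eye, right_eye, threshold=200):
    """Intersection over union of the bright highlights in two aligned eye crops.

    Returns a value between 0.0 (no overlap) and 1.0 (identical highlights),
    or None when neither eye contains a highlight to compare.
    """
    left = highlight_mask(left_eye, threshold)
    right = highlight_mask(right_eye, threshold)
    union = left | right
    if not union:
        return None
    return len(left & right) / len(union)
```

A score close to 1.0 means the two reflections have nearly the same shape and position, as expected in a genuine photo; a low score is a hint, not proof, that the face may be synthetic.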

Moreover, Intel has introduced an AI tool named FakeCatcher that can identify in real time whether a video has been altered using deepfake technology. Intel claims a 96% accuracy rate and detection within milliseconds. Unlike other deepfake detectors that rely on deep learning to analyze a video’s raw data for signs of manipulation, FakeCatcher looks for signs of a real human, such as subtle changes in blood flow visible in the pixels of a video.
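
The blood-flow idea can be illustrated with a toy Python sketch. This is not how FakeCatcher is implemented, which the article does not describe; it is a generic illustration of reading a pulse-like rhythm from skin color. It assumes each frame has already been reduced to a list of (r, g, b) skin pixels, and the 0.7 to 4.0 Hz band (roughly 42 to 240 beats per minute) is an assumed range for a human pulse.

```python
import math


def mean_green(frames):
    """Average green value per frame; each frame is a list of (r, g, b) skin pixels."""
    return [sum(pixel[1] for pixel in frame) / len(frame) for frame in frames]


def dominant_frequency(signal, fps, low_hz=0.7, high_hz=4.0):
    """Find the strongest frequency in the assumed pulse band using a plain DFT.

    Returns (frequency_hz, share_of_band_power), or (None, 0.0) when the
    signal carries no energy in the band.
    """
    n = len(signal)
    mean = sum(signal) / n
    centered = [s - mean for s in signal]  # remove the constant brightness level
    best_freq, best_power, total_power = None, 0.0, 0.0
    for k in range(1, n // 2 + 1):
        freq = k * fps / n
        if not (low_hz <= freq <= high_hz):
            continue
        re = sum(x * math.cos(2 * math.pi * k * t / n) for t, x in enumerate(centered))
        im = sum(x * math.sin(2 * math.pi * k * t / n) for t, x in enumerate(centered))
        power = re * re + im * im
        total_power += power
        if power > best_power:
            best_freq, best_power = freq, power
    if best_freq is None or total_power == 0:
        return None, 0.0
    return best_freq, best_power / total_power
```

In footage of a real face, one frequency in this band would be expected to stand out clearly; a flat or noisy spectrum is one possible hint that the face was synthesized, though video compression and lighting changes can produce the same effect.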

There are also other tools that analyze the noise in digital photos. The noise structure of natural and computer-generated images can differ markedly, which helps distinguish genuine photos from fakes.
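
As a rough illustration of what analyzing noise structure means, the Python sketch below isolates the fine-grained noise in a grayscale image by subtracting a blurred copy, then measures how strong that noise is. Real forensic tools use far more sophisticated noise models; the 3x3 box blur and the variance statistic here are simplifying assumptions chosen for brevity.

```python
def noise_residual(gray):
    """Subtract a 3x3 box-blurred copy from a grayscale image (list of rows).

    Only interior pixels are returned, so the image must be at least 3x3.
    """
    height, width = len(gray), len(gray[0])
    residual = []
    for r in range(1, height - 1):
        row = []
        for c in range(1, width - 1):
            local_mean = sum(
                gray[r + dr][c + dc] for dr in (-1, 0, 1) for dc in (-1, 0, 1)
            ) / 9.0
            row.append(gray[r][c] - local_mean)
        residual.append(row)
    return residual


def residual_variance(gray):
    """Variance of the noise residual: a crude measure of noise strength."""
    values = [v for row in noise_residual(gray) for v in row]
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values) / len(values)
```

One possible use is to compare this value across regions of the same photo: a face whose noise level differs sharply from the background may have been pasted in or generated separately.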

The Coalition for Content Provenance and Authenticity (C2PA), led by Adobe, Microsoft, Intel, and the BBC, has also developed a standard for distinguishing computer-generated content from real content. Until it is widely adopted, the potential for malicious use of deepfakes remains significant.

How to Stay Safe from Deepfakes

You should always exercise caution when consuming information online, especially when it comes to sensitive topics that can cause conflict or provoke intense emotions. Be alert to possible manipulations or distortions of facts that can be used to push a particular agenda or influence public opinion. Always seek out multiple independent sources to verify the accuracy of online content. Don’t rely only on the videos, photographs, or audio on someone’s profile, as these may be deepfakes or otherwise altered. Do your own research and cross-check information from different sources to ensure its authenticity. Finally, stay away from synthetic social networks and fake social media accounts.

To avoid becoming a victim of deepfakes, avoid posting personal information about yourself online, as it can be used for identity theft or other malicious purposes. Be careful about what kind of information you share and with whom you share it, and always take steps to protect your privacy and security online.

It is important to educate people about the dangers of deepfakes and to develop new technologies to detect and combat their use. The creation and distribution of deepfakes must be regulated, and there should be legal consequences for those who use deepfakes with malicious intent.

Conclusion

Deepfakes can have severe impacts on people all over the globe, including reputational damage, financial loss, and identity theft. The best way to stay safe is to understand the technology behind deepfakes and to use deepfake detection tools. We must also remain vigilant, verify information sources, and keep up to date with the latest developments in technology. Ultimately, the key to preventing the spread of deepfakes is education and awareness, so let’s all stay informed and stay safe in this digital age.

If you enjoyed this article, you may also like our comprehensive guide to ChatGPT.
