
Deepfakes are a rapidly growing concern in the digital world because they can cause significant harm to individuals and to society as a whole. As artificial intelligence and machine learning technologies grow more sophisticated, creating realistic, convincing deepfakes is becoming easier every day. Deepfakes are manipulated videos or images that can be used for many purposes, including spreading misinformation and committing identity theft. They can spread propaganda that undermines people’s trust in institutions, incites chaos and confusion, and upends the very fabric of our society. In this article, we’ll explore the dangers of deepfakes and offer practical tips on how to detect deepfake videos.

What Is a Deepfake?

We can define a deepfake as a type of synthetic media that has been manipulated or generated using artificial intelligence (AI) algorithms. In other words, deepfakes use machine learning to create or alter audio, video, or images so that they depict events that never occurred or show people saying or doing things they never actually said or did.


If you have seen videos of Mark Zuckerberg boasting about his total control over billions of people’s stolen data, Barack Obama using offensive words to describe Donald Trump, or Kit Harington delivering a heartfelt apology for the disappointing finale of Game of Thrones, then you already have an idea of what deepfakes are. Deepfakes are the modern-day equivalent of photoshopping. Like computer viruses, these altered and manipulated videos, audio clips, and images can harm both individuals and organizations. Good deepfakes can be exceptionally hard to identify, and unlike computer viruses, they can be produced by almost anyone.

Did you know that neural networks can create realistic images of people who have never existed? On websites such as This Person Does Not Exist or Generated Photos, you can see a vast array of AI-generated faces, and none of the people shown is real. You can find more of this kind of AI wizardry on the This X Does Not Exist website.

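Experts have identified three of the most dangerous types of deepfakes. The first is the voice or audio deepfake: an AI-generated voice that closely mimics a real person’s. Deepfake audio is currently the most lucrative technique scammers use for deepfake voice phishing. In 2019, for example, criminals used voice-cloning technology to trick the CEO of a UK energy company into transferring $243,000.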

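The second type is the deepfake video, which can manipulate public opinion and brainwash viewers by presenting false information convincingly. The infamous videos featuring Barack Obama and President Trump are examples. Deepfake videos can also be used to create fake footage of ordinary people, especially revenge porn.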

Finally, this AI technology can produce deepfake text that convincingly mimics the writing of real people and genuine social media posts. Fake accounts built on such text may look authentic and even attract followers over time, and a group of them can easily be used to slander a company or a product. The main way to spot AI-generated text is to look for patterns in the language, such as word choice and sentence structure, and to detect inconsistencies.
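To make “patterns in word choice and sentence structure” more concrete, here is a minimal Python sketch that computes a few simple stylometric features: vocabulary variety (the type-token ratio) and the mean and spread of sentence length. The function name `style_features` and the choice of features are illustrative assumptions, not an established detector. On their own, such numbers are weak evidence; they only become meaningful when compared with text known to come from the same person.

```python
import re
import statistics

def style_features(text: str) -> dict:
    """Simple stylometric features of a text (hypothetical helper for illustration)."""
    # Split into sentences at runs of . ! ? followed by whitespace or end of text.
    sentences = [s for s in re.split(r"[.!?]+(?:\s+|$)", text) if s.strip()]
    words = re.findall(r"[a-z']+", text.lower())
    lengths = [len(re.findall(r"[a-z']+", s.lower())) for s in sentences]
    return {
        "sentences": len(sentences),
        # Type-token ratio: distinct words divided by total words (vocabulary variety).
        "type_token_ratio": len(set(words)) / len(words) if words else 0.0,
        # Average sentence length in words, and how much it varies; unusually
        # uniform sentences are, at most, one weak hint of machine-generated text.
        "mean_sentence_len": statistics.mean(lengths) if lengths else 0.0,
        "sentence_len_stdev": statistics.pstdev(lengths) if lengths else 0.0,
    }

sample = "Great product. Works great. Great value. Great price."
print(style_features(sample))
```

In practice you would compute these features for a suspicious post and for several genuine posts by the same account, and look for large differences rather than relying on any fixed cutoff.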

The Dangers of Deepfake Technology

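In today’s world, awash with fake news and disinformation, people use deepfake technology to spread misinformation, construct false narratives, craft particular public images, censor or ridicule others, and create pornographic content.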

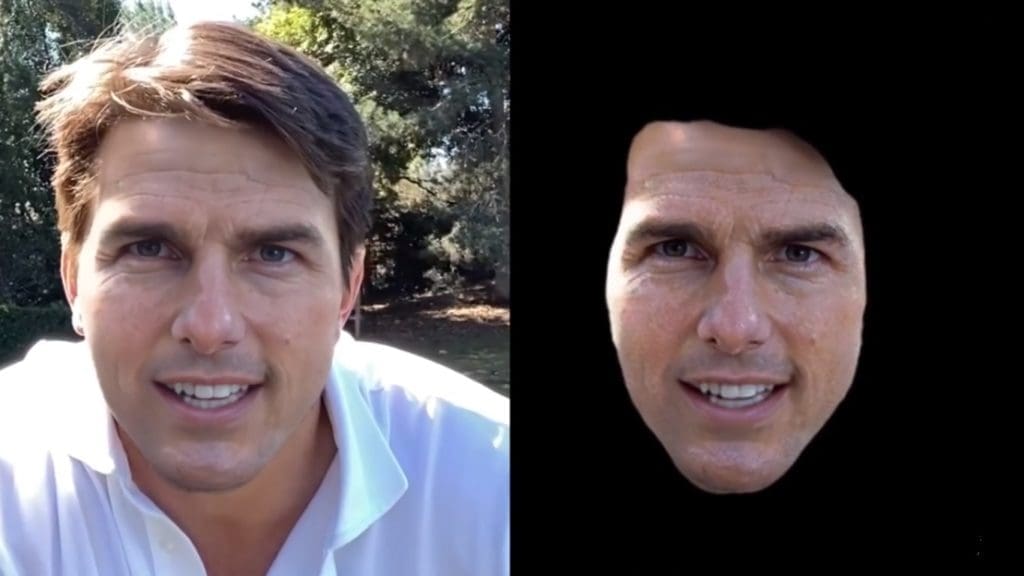

Deepfakes often target celebrities. Their fame and wide media exposure make them perfect material for memes, and AI technology can easily produce convincing deepfakes of them. A few years ago, TikTok was flooded with videos that appeared to show actor Tom Cruise doing things that were unusual for him, such as fooling around in an expensive men’s clothing store, performing a coin trick, and playfully growling his way through a short rendition of “Crash Into Me” by the Dave Matthews Band. Another example is a video of Yang Mi, a famous Chinese actress, that went viral a few years ago. In it, her face was inserted into a 1983 Hong Kong TV series. The video received 240 million views before Chinese authorities eventually removed it. The creator, a fan of Yang Mi, apologized publicly on Weibo and said he had made the video to raise awareness of deepfake technology.

In politics, deepfakes can be used to create fake videos or images of political figures saying or doing things they never said or did, either to spread misinformation or to damage someone’s reputation. In 2019, a video of Nancy Pelosi was edited to make her appear to slur her words as if she were drunk. It was shared around the world, and Facebook declined to remove it even after it was shown to be fake. Videos of Donald Trump telling a story about a reindeer and of Barack Obama delivering a public service announcement are other examples of this kind of fake video.

Did you know that 96% of deepfakes are pornographic? The vast majority (99%) of the faces used in these manipulated videos belong to real women, including non-celebrities. Deepfakes let a person effortlessly superimpose an ex-girlfriend’s face onto a porn actor’s body and circulate the result within their social circle. The victim may then suffer the consequences of being falsely associated with a taboo act that never happened.

Deepfakes are also increasingly used in financial scams. Scammers typically create deepfakes of important people, such as CEOs or politicians, and use them to trick victims into handing over money or sensitive information. For example, a deepfake video of a CEO might be used to persuade an employee to transfer money to a fraudulent account. In the 2019 case of a UK-based energy company, scammers cloned the voice of a senior executive at the firm’s parent company and used it to trick the UK company’s CEO into transferring $243,000 to a fraudulent account.

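Another dangerous aspect of deepfakes is that many simple tools for creating them are available online. According to Ben Colman of Reality Defender, any middle schooler can make a deepfake with a five-year-old iPhone.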

Some Ethical Applications of Deepfakes

It is worth remembering that deepfake technology also has ethical applications. One example is enhancing the quality of old or low-resolution videos: instead of simply upscaling the existing pixels, the technology redraws the image, which can genuinely improve its quality. Deepfake technology can also synchronize speech with lip movements, known as lip syncing. Matching lip movements to any language would be a significant advance for film and TV dubbing. A practical example is the anti-malaria PSA featuring David Beckham, in which he appears to speak nine languages; deepfake technology was used to adapt his lip movements to each one.

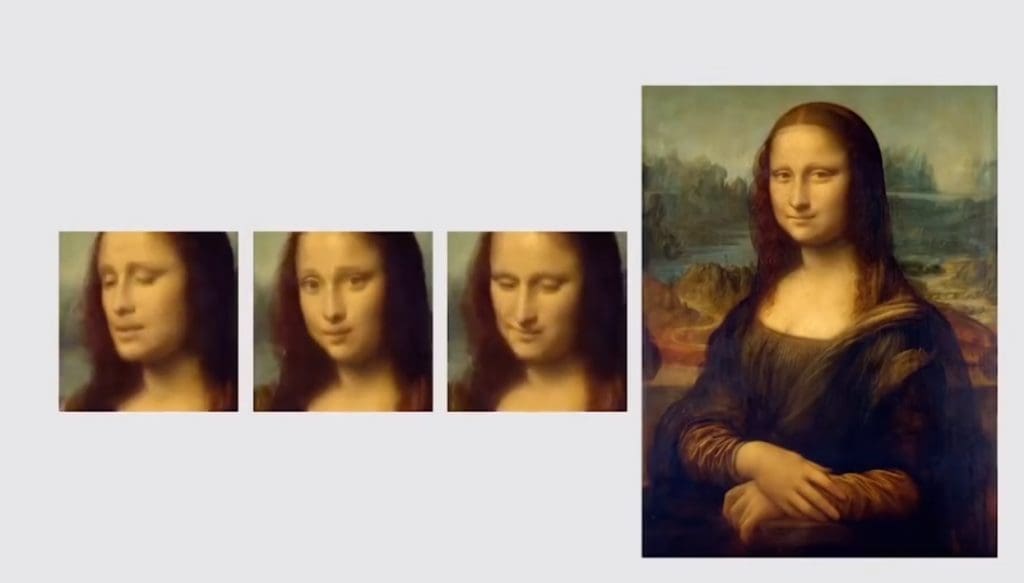

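Deepfakes can even bring deceased artists back to life, as with Salvador Dalí at the Salvador Dalí Museum in Florida. Another distinctive application is animating artworks: Samsung’s AI research lab, for example, made the Mona Lisa move her head, eyes, and mouth. In the future, this technology could save the film industry time and money.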

How to Spot Deepfakes

Look for Inconsistencies in the Video

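Deepfakes often contain subtle inconsistencies that give them away. For example, lighting, shadows, and reflections may not match the surroundings, or a person’s movements may look slightly unnatural.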

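A common warning sign in a deepfake video or image is unnatural eyes, especially eyes that look odd or never blink. Blinking and natural eye movement are difficult to fake, so if someone stares without blinking, or their eye movements look strange, the video may have been manipulated. In addition, people’s eyes naturally move as they talk to each other, which is hard to reproduce accurately in a fake video.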
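One common way to quantify blinking, taken from the computer-vision literature, is the eye aspect ratio (EAR): the ratio of the eye’s two vertical openings to its width, computed from six landmark points around each eye. The minimal sketch below assumes those landmarks have already been extracted for every frame by a face-landmark detector (not shown), ordered p1 to p6 as in the common 68-point layout. The 0.2 threshold and two-frame minimum are assumed values you would tune, and all function names are hypothetical.

```python
import math

def eye_aspect_ratio(eye: list[tuple[float, float]]) -> float:
    """EAR from six (x, y) eye landmarks p1..p6: corners p1 and p4,
    upper lid p2 and p3, lower lid p5 and p6."""
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)  # two lid-to-lid openings
    horizontal = math.dist(p1, p4)                     # corner-to-corner width
    return vertical / (2.0 * horizontal)

def count_blinks(ears: list[float], threshold: float = 0.2, min_frames: int = 2) -> int:
    """Count runs of at least `min_frames` consecutive frames with EAR below `threshold`."""
    blinks, run = 0, 0
    for ear in ears:
        if ear < threshold:
            run += 1           # eye currently closed, or nearly so
        else:
            if run >= min_frames:
                blinks += 1    # a closed run just ended: count it as one blink
            run = 0
    if run >= min_frames:      # the clip ended while the eye was closed
        blinks += 1
    return blinks

def blinks_per_minute(ears: list[float], fps: float, **kwargs) -> float:
    """Blink rate over the whole clip; `fps` is the video's frame rate."""
    minutes = len(ears) / fps / 60.0
    return count_blinks(ears, **kwargs) / minutes if minutes > 0 else 0.0
```

A clip in which someone talks for a minute or more with no detected blinks is worth a closer look, although lighting, glasses, and landmark errors can all distort the count.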

A disproportionate face and body is another sign of a deepfake. The AI algorithms that create deepfakes can struggle to reproduce the proportions of a person’s face and body accurately, resulting in a distorted or exaggerated appearance. If a person looks strange when they turn their head to the side, or their movements seem disjointed or unnatural from one moment to the next, the video may have been altered. Mismatched skin tones and oddly placed shadows are two other important clues.

Check the Audio Quality

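The audio in a deepfake may not match the person’s lip movements or tone of voice. Listen carefully to judge whether the audio sounds natural and fits the context of the video. When making deepfakes, creators usually pay more attention to the visuals than to the audio. Signs of fake audio include lip movements that don’t match the spoken words, a robotic-sounding voice, odd pronunciation, digital background noise, or even no audio at all.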
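A crude way to check whether audio and lip movements agree is to compare, frame by frame, how loud the audio is with how wide the mouth is open; in genuine speech the two tend to rise and fall together. The sketch below assumes you already have mono audio samples and a per-frame mouth-opening measurement (for example, the distance between upper- and lower-lip landmarks). `frame_rms` and `pearson` are hypothetical helper names, and a low correlation is only a hint, not proof.

```python
import math

def frame_rms(samples: list[float], sample_rate: int, fps: float) -> list[float]:
    """Loudness (root-mean-square amplitude) of the audio for each video frame."""
    step = max(1, int(sample_rate // fps))  # audio samples per video frame
    return [
        math.sqrt(sum(s * s for s in samples[i:i + step]) / step)
        for i in range(0, len(samples) - step + 1, step)
    ]

def pearson(a: list[float], b: list[float]) -> float:
    """Pearson correlation of two series, truncated to the shorter length."""
    n = min(len(a), len(b))
    if n < 2:
        return 0.0
    a, b = a[:n], b[:n]
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    sa = math.sqrt(sum((x - ma) ** 2 for x in a))
    sb = math.sqrt(sum((y - mb) ** 2 for y in b))
    # A constant series has no variation to correlate; report 0 instead of dividing by zero.
    return cov / (sa * sb) if sa and sb else 0.0

# Usage, assuming `audio` and `mouth_open` come from your own extraction step:
# score = pearson(frame_rms(audio, 16000, 30), mouth_open)
```

Plain correlation ignores small timing offsets between sound and lip motion, so a more careful check would also try shifting one series by a few frames.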

Use Technology to Identify Deepfakes

Advances in deepfake technology have also spurred the development of protection tools. The University at Buffalo has created a tool that automatically identifies deepfake photos by examining light reflections in the eyes. When we look at something, the scene is reflected in both of our eyes, so in genuine photos the two eyes show reflections of the same shape and color. Generated faces, however, often fail to reproduce matching reflections in both eyes, because the generator combines features from many photos to produce one image.
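The eye-reflection approach compares the bright specular highlights in the two eyes. A simplified version of that kind of comparison is sketched below: threshold each eye crop to find its brightest pixels, then measure how much the two highlight masks overlap using intersection over union (IoU). It assumes both eye crops are grayscale, already aligned, and the same size; the threshold of 220 and the helper names are assumptions, and this is not the university’s actual tool.

```python
def highlight_mask(gray: list[list[int]], thresh: int = 220) -> list[list[bool]]:
    """Mark very bright pixels (candidate specular highlights) in a grayscale eye crop."""
    return [[value >= thresh for value in row] for row in gray]

def mask_iou(a: list[list[bool]], b: list[list[bool]]) -> float:
    """Intersection over union of two same-sized boolean masks."""
    if len(a) != len(b) or any(len(ra) != len(rb) for ra, rb in zip(a, b)):
        raise ValueError("masks must have the same shape")
    pairs = [(x, y) for ra, rb in zip(a, b) for x, y in zip(ra, rb)]
    inter = sum(1 for x, y in pairs if x and y)
    union = sum(1 for x, y in pairs if x or y)
    # No highlight in either eye: nothing to compare, so treat it as consistent.
    return inter / union if union else 1.0

# Usage, with `left_crop` and `right_crop` as aligned grayscale eye crops:
# score = mask_iou(highlight_mask(left_crop), highlight_mask(right_crop))
# A low score means the reflections differ, which may warrant a closer look.
```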

Intel has also introduced an AI tool named FakeCatcher that, it says, can detect in real time whether a video has been altered with deepfake technology. Intel claims a 96% accuracy rate and detection within milliseconds. Unlike other deepfake detectors, which use deep learning to search a video’s raw data for signs of manipulation, FakeCatcher looks for signals of a real human, such as subtle changes in blood flow visible in the video’s pixels.
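FakeCatcher’s internals are Intel’s own, but the general idea behind blood-flow signals, remote photoplethysmography (rPPG), can be illustrated simply: skin color changes very slightly with each heartbeat, so the average green-channel brightness of a face region over time should contain a periodic component at a plausible pulse rate. The sketch below assumes you already have one average green value per frame. It uses a naive discrete Fourier transform to find the strongest frequency in an assumed 0.7 to 4 Hz band (about 42 to 240 beats per minute). It is a teaching illustration, not Intel’s algorithm.

```python
import math

def dominant_pulse_hz(green_means: list[float], fps: float,
                      lo_hz: float = 0.7, hi_hz: float = 4.0) -> tuple[float, float]:
    """Strongest frequency in the [lo_hz, hi_hz] band of a per-frame green-channel
    signal, plus that frequency's share of the total power in the band."""
    n = len(green_means)
    if n < 2:
        raise ValueError("need at least two frames")
    mean = sum(green_means) / n
    x = [v - mean for v in green_means]  # drop the constant (average brightness) term
    best_f, best_power, band_power = 0.0, 0.0, 0.0
    for k in range(1, n // 2 + 1):       # DFT bins up to the Nyquist frequency
        f = k * fps / n                  # frequency of bin k in Hz
        if not lo_hz <= f <= hi_hz:
            continue
        real = sum(v * math.cos(2 * math.pi * k * t / n) for t, v in enumerate(x))
        imag = -sum(v * math.sin(2 * math.pi * k * t / n) for t, v in enumerate(x))
        power = real * real + imag * imag
        band_power += power
        if power > best_power:
            best_f, best_power = f, power
    share = best_power / band_power if band_power else 0.0
    return best_f, share
```

On a real face, the strongest in-band frequency should sit at a plausible heart rate and hold a noticeable share of the band’s power; a weak or erratic peak is one hint, among many, that the face may be synthetic. Real recordings are far noisier than this toy signal, so a practical system would need face tracking and filtering on top of it.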

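Other tools analyze the digital noise in photos. Natural noise and computer-generated noise can differ markedly in structure, which helps distinguish real photos from fake ones.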
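As an illustration of noise analysis, the sketch below isolates a rough noise residual by subtracting the 3x3 local average from each pixel (a simple high-pass filter), then measures the residual’s variance in fixed-size tiles. The working assumption is that a camera photo tends to have fairly uniform noise across the frame, so tiles whose noise level differs sharply from the rest may deserve scrutiny. Images are plain lists of grayscale rows, and the helper names and tile size are hypothetical.

```python
import statistics

def noise_residual(gray: list[list[float]]) -> list[list[float]]:
    """High-pass residual: each interior pixel minus the mean of its 3x3 neighborhood."""
    h, w = len(gray), len(gray[0]) if gray else 0
    residual = []
    for y in range(1, h - 1):
        row = []
        for x in range(1, w - 1):
            window = [gray[y + dy][x + dx] for dy in (-1, 0, 1) for dx in (-1, 0, 1)]
            row.append(gray[y][x] - sum(window) / 9.0)
        residual.append(row)
    return residual

def block_variances(residual: list[list[float]], block: int = 8) -> list[float]:
    """Variance of the residual in each non-overlapping block x block tile."""
    if not residual:
        return []
    variances = []
    for y in range(0, len(residual) - block + 1, block):
        for x in range(0, len(residual[0]) - block + 1, block):
            tile = [residual[y + i][x + j] for i in range(block) for j in range(block)]
            variances.append(statistics.pvariance(tile))
    return variances
```

Tiles whose variance stands far apart from the others mark regions worth inspecting; a uniform result, on the other hand, does not prove an image is genuine.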

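The Coalition for Content Provenance and Authenticity (C2PA), led by Adobe, Microsoft, Intel, and the BBC, has also developed a standard for distinguishing computer-generated content from authentic content. Until that standard is widely adopted, however, the potential for malicious use of deepfakes remains enormous.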
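The Coalition for Content Provenance and Authenticity (C2PA) standard works by binding a cryptographic hash of the content into a signed manifest, so that any later edit to the file can be detected. The toy sketch below shows only the hashing half of that idea, using SHA-256 from Python’s standard library. It does not read or verify real C2PA manifests or their signatures, and the function names are hypothetical.

```python
import hashlib
import hmac

def content_hash(data: bytes) -> str:
    """SHA-256 digest of the raw file bytes, as a hex string."""
    return hashlib.sha256(data).hexdigest()

def matches_recorded_hash(data: bytes, recorded_hex: str) -> bool:
    """True if the bytes are unchanged since `recorded_hex` was computed."""
    # compare_digest performs a constant-time comparison of the two strings.
    return hmac.compare_digest(content_hash(data), recorded_hex.lower())
```

Changing even one byte of the file produces a completely different digest, which is why a recorded hash can reveal tampering; on its own, though, it cannot say who created the content, which is what the signature in a real manifest is for.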

How to Stay Safe from Deepfakes

Always be cautious when consuming information online, especially on sensitive topics that can cause conflict or provoke strong emotions. Watch for manipulated or distorted facts that may be used to push a particular agenda or sway public opinion. Seek out multiple independent sources to verify online content, and don’t rely solely on the videos, photos, or audio on someone’s profile, as these may be deepfakes or otherwise altered. Do your own research and cross-check information across sources to confirm its authenticity. Finally, stay away from synthetic social networks and fake social media accounts.

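To avoid becoming a victim of deepfakes, don’t post personal information about yourself online, as it could be used for identity theft or other malicious purposes. Be careful about what kind of information you share and with whom, and always take steps to protect your online privacy and security.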

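It is essential to educate people about the dangers of deepfakes and to develop new technologies that detect and counter their use. The creation and distribution of deepfakes must be regulated, and those who use deepfakes maliciously should face legal consequences.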

Conclusion

Deepfakes can have severe consequences for people all over the globe, including reputational damage, financial loss, and identity theft. Staying safe requires understanding the technology behind deepfakes and using deepfake detection tools. We must always remain vigilant, verify our information sources, and keep up with the latest developments in technology. Ultimately, the key to preventing the spread of deepfakes is education and awareness, so let’s all stay informed and stay safe in this digital age.

